The use of medical imaging in clinical practice has increased dramatically in recent decades, but real-world deployments still face many challenges related to data storage and management. To address them, BMD proposes an architecture that supports the scaling, distribution and intelligent management of medical imaging services.

Medical images in the DICOM format typically have high spatial resolution, and in some medical fields, such as breast tomosynthesis or clinical pathology, a single study can easily reach gigabytes in size. As a result, these images require centralized storage systems with high capacity.

At BMD, we have been deploying global-scale medical imaging platforms in distinct contexts. One of our pieces of software is Dicoogle (www.dicoogle.com), a DICOM image indexer and search tool for fast querying of medical imagery. To better support the Dicoogle service, we propose a scalable management architecture that partitions and replicates medical images intelligently, according to replication policies pre-established by the institutions that use the system. The solution is based on Kubernetes (often abbreviated as “K8S”), an open-source cluster manager used to deploy, scale and manage containerized applications anywhere.

Architectural requirements and proposal

This architecture was designed to respond to the following requirements:

- Scalability – The architecture must track metrics published by the application and adjust the number of replicas accordingly, spreading high request loads or heavy processing across them so that the system keeps good response times for its users.

- Distribution – The system must be distributed across multiple machines to provide redundancy and load balancing, both for the main application and for the storage system.

- Intelligence – The architecture must automate its adjustments according to the policies and rules stipulated by the institutions that use it.

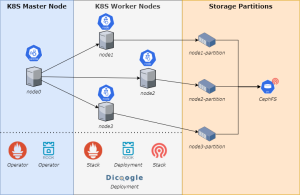

Pictured above is a proof-of-concept deployment of the proposed architecture. The Kubernetes cluster is composed of four machines, one master and three workers, so that services are distributed across all machines by default.

The proposed architecture consists of the Kubernetes cluster described above, running Dicoogle distributed across the three worker nodes. Other software running in the cluster to support Dicoogle includes:

- Prometheus – Collects metrics on Dicoogle’s behavior, which can be used to adjust Dicoogle’s replication automatically.

- Ceph – A highly configurable storage solution able to store data in a distributed and redundant manner. Access to data in this storage system can be modified according to specified rules and configurations.
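To make the role of these metrics concrete, here is a minimal sketch of a proportional scaling rule of the kind used by the Kubernetes Horizontal Pod Autoscaler: the desired replica count is the current count multiplied by the ratio of the observed metric to its target, rounded up. The article does not state which autoscaling mechanism or metric is used, so the function name, the example metric (requests per second per replica) and the replica bounds below are assumptions for illustration only.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 3) -> int:
    """Proportional scaling rule (hypothetical helper, not part of Dicoogle).

    desired = ceil(current_replicas * current_metric / target_metric),
    clamped to [min_replicas, max_replicas]. The default upper bound of 3
    is an assumption mirroring the three worker nodes of the proof of concept.
    """
    if current_replicas < 1:
        raise ValueError("current_replicas must be at least 1")
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Example: one replica observing 180 req/s against an assumed target of 100 req/s.
print(desired_replicas(1, 180.0, 100.0))
```

With these assumed numbers, the rule asks for a second replica; when the observed load falls well below the target, it scales back down to the minimum.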

Evaluation of the system

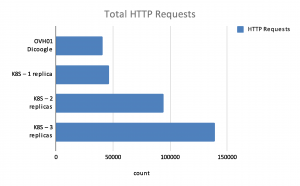

We designed a load test that puts this architecture under heavy usage, using the open-source testing tool K6. The test was developed with two goals in mind:

- Validate our distributed, replicated K8S architecture against a monolithic, single-server solution;

- Justify the usage of Ceph over a more standard NFS storage.

The load test slowly ramps up from one to one hundred virtual users, each placing a constant heavy load on the site by downloading DICOM images for twenty minutes.
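The shape of such a ramp can be sketched as a simple function of elapsed time. This is only an illustration of the load profile, written in Python rather than as a K6 script; the ramp duration is not given in this article, so `ramp_s` is a hypothetical parameter, and a linear ramp is an assumption.

```python
import math


def virtual_users_at(elapsed_s: float,
                     ramp_s: float,
                     start_users: int = 1,
                     peak_users: int = 100) -> int:
    """Active virtual users after `elapsed_s` seconds of an assumed linear ramp.

    The count grows from `start_users` to `peak_users` over `ramp_s` seconds
    and then stays at the peak.
    """
    if ramp_s <= 0:
        raise ValueError("ramp_s must be positive")
    # Fraction of the ramp completed, clamped to [0, 1].
    progress = min(max(elapsed_s / ramp_s, 0.0), 1.0)
    return start_users + math.floor(progress * (peak_users - start_users))


# Example with a hypothetical 10-minute ramp, sampled every 5 minutes.
for t in (0, 300, 600, 900):
    print(t, virtual_users_at(t, ramp_s=600))
```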

The results show that our distributed architecture with one replica transmitted medical images and processed HTTP requests at near-identical, and slightly better, performance than the monolithic Dicoogle. Adding replicas increases the capacity of the K8S architecture to respond to requests and transmit data: by roughly 100% from one to two replicas, and by roughly 50% from two to three replicas.
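These two percentages are consistent with near-linear scaling, which a short calculation makes explicit. The sketch below compounds the approximate gains reported above into capacity relative to a single replica; it uses the rounded figures from this article, not the measured values from the full paper, and the function name is illustrative.

```python
def relative_capacity(gains: list[float]) -> list[float]:
    """Compound successive relative gains into capacity relative to one replica."""
    capacity = [1.0]
    for gain in gains:
        capacity.append(capacity[-1] * (1.0 + gain))
    return capacity


# Roughly +100% from one to two replicas, roughly +50% from two to three.
capacity = relative_capacity([1.00, 0.50])

# Capacity delivered per replica, relative to the single-replica baseline.
per_replica = [c / n for n, c in enumerate(capacity, start=1)]

print(capacity)     # [1.0, 2.0, 3.0]
print(per_replica)  # [1.0, 1.0, 1.0]
```

In other words, a 50% gain from two to three replicas is exactly what linear scaling predicts (3/2), so the smaller percentage does not indicate diminishing returns.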

Conclusion

The solution makes use of state-of-the-art cloud technologies and commonplace protocols, specifically the Kubernetes ecosystem, which automates operational tasks through the containerization of applications.

The Dicoogle open-source framework was used as a vendor-neutral archive application. It was extended with a plugin developed to provide the services required by the proposed Kubernetes-based architecture. The system scales according to service metrics exposed through a Dicoogle interface.

The partitioning and replication of medical studies in Dicoogle are implemented through integration with the Ceph storage framework. The distributed storage policies can be managed by the Dicoogle information system, and every instance of this application in the network can provide access to all repositories according to access rules and programmed service latency. For instance, the examinations from one institution can be replicated in all data centers, while those from another are stored only in the regional data center.
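The per-institution example above can be modeled as a small placement decision. The sketch below is purely illustrative: the class, field and data center names are hypothetical, and it only models the decision itself, not how Dicoogle or Ceph actually represent or enforce such policies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReplicationPolicy:
    """Hypothetical per-institution policy (not Dicoogle's or Ceph's data model)."""
    institution: str
    replicate_everywhere: bool
    regional_data_center: str


def target_data_centers(policy: ReplicationPolicy,
                        all_data_centers: set[str]) -> list[str]:
    """Return the data centers that should hold this institution's studies."""
    if policy.regional_data_center not in all_data_centers:
        raise ValueError(f"unknown data center: {policy.regional_data_center}")
    if policy.replicate_everywhere:
        return sorted(all_data_centers)
    return [policy.regional_data_center]


# Hypothetical data centers and institutions, for illustration only.
data_centers = {"dc-north", "dc-center", "dc-south"}
hospital_a = ReplicationPolicy("hospital-a", True, "dc-north")
clinic_b = ReplicationPolicy("clinic-b", False, "dc-south")

print(target_data_centers(hospital_a, data_centers))
print(target_data_centers(clinic_b, data_centers))
```

Keeping the policy as plain data separates the rule an institution chooses from the mechanism that carries it out, which matches the article's description of policies being managed in one place and applied by the storage layer.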

This article was accepted for publication in the 2021 IEEE International Conference on Bioinformatics and Biomedicine, in particular for the Fifth Edition of Workshop Processes and Algorithms for Healthcare and Life Quality Improvement.

Read the full results and research of this evaluation in the full article, available here.